IBM today introduced the Power E1080 server, its first system powered by an IBM Power10 microprocessor. The new system reinforces IBM’s emphasis on hybrid cloud markets, and the new chip beefs up its inference capabilities. IBM – like other CPU makers – is hoping to make inferencing a core capability of host CPUs and diminish the need for separate AI accelerators. IBM’s Power8 and Power9 were usually paired with Nvidia GPUs to deliver AI (and HPC) capabilities.

“When we were designing the E1080, we had to be cognizant of how the pandemic was changing not only consumer behavior, but also our customers’ behavior and needs from their IT infrastructure,” said Dylan Boday, vice president of product management for AI and hybrid cloud, in the official announcement. “The E1080 is IBM’s first system designed from the silicon up for hybrid cloud environments, a system tailor-built to serve as the foundation for our vision of a dynamic and secure, frictionless hybrid cloud experience.”

Few details about the Power10 chip were discussed, nor was a more detailed spec sheet for the Power E1080 presented at an analyst/press pre-briefing last week. IBM instead chose to cite new key functional capabilities that blurred the boundary between system and chip and to highlight favorable benchmarks. General availability for the E1080 is scheduled for later this month. No timetable was given for direct sales (if any) of the Power10 chips.

Here are the highlights as reported by IBM:

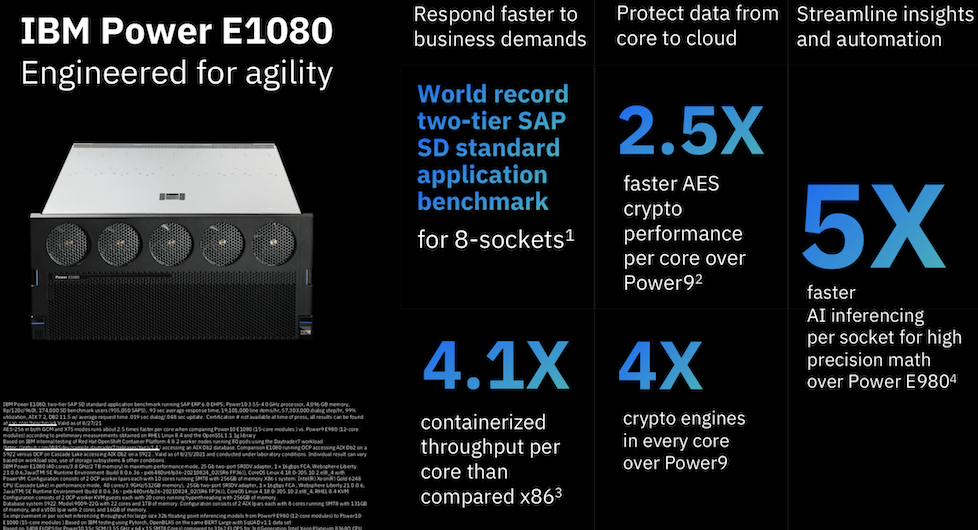

- Enhancements for hybrid cloud such as by-the-minute metering of Red Hat software including Red Hat OpenShift and Red Hat Enterprise Linux, 4.1x greater OpenShift containerized throughput per core vs. x86-based servers, and “architectural consistency and cloud-like flexibility across the entire hybrid cloud environment to drive agility and improve costs without application refactoring.”

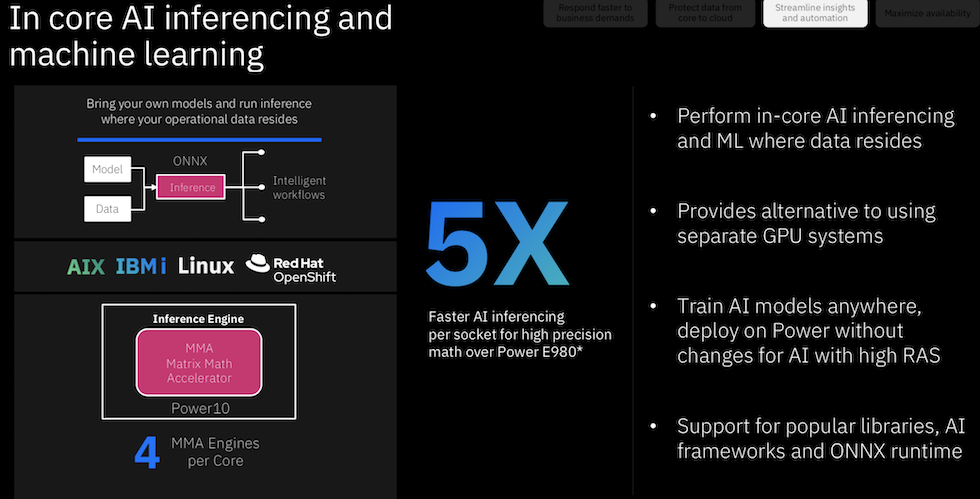

- New hardware-driven performance improvements that deliver up to 50 percent more performance and scalability than its predecessor, the [Power9-based] IBM Power E980, while also reducing energy use and carbon footprint relative to the E980. The E1080 also features four matrix math accelerators per core, enabling 5x faster inference performance as compared to the E980.

- New security tools designed for hybrid cloud environments including transparent memory encryption “so there is no additional management setup,” 4x the encryption engines per core, allowing for 2.5x faster AES encryption as compared to the IBM Power E980, and “security software for every level of the system stack.”

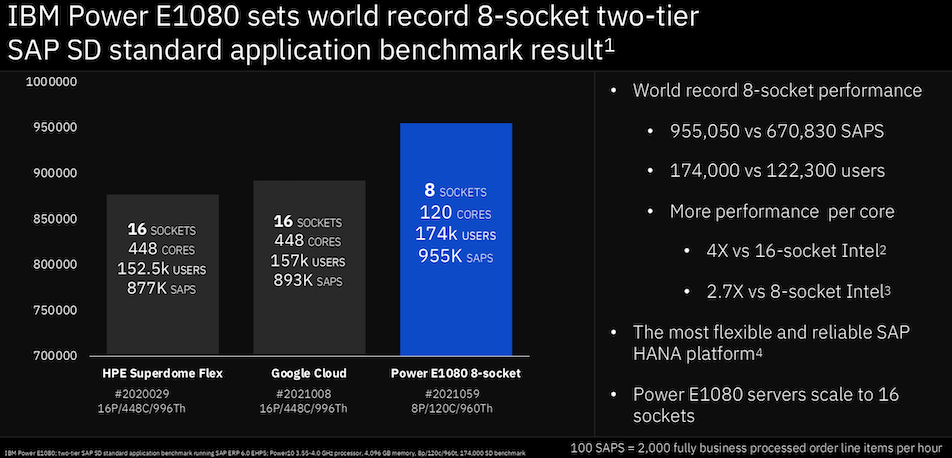

- Robust ecosystem of ISVs, Business Partners, and support to broaden the capabilities of the IBM Power E1080 and how customers can build their hybrid cloud environment, including record-setting performance for SAP applications in an 8-socket system. IBM is also launching a new tiered Power Expert Care service to help clients as they protect their systems against the latest cybersecurity threats while also providing hardware and software coherence and higher systems availability.

In recent years IBM’s positioning of its Power platforms and Power CPU line has significantly transitioned from HPC-centricity to enterprise-centricity with a distinct hybrid cloud focus. Introduction of the E1080 server seems to complete the journey. Standing up the Summit supercomputer (at ORNL) in 2018, based on IBM’s AC922 nodes with Power9 CPUs, was probably the high-water mark for IBM HPC. Summit was the fastest supercomputer in the world for a couple of cycles of the Top500.

However, Power9-based IBM systems achieved lackluster traction in the broader HPC market. IBM shifted gears a few times trying to find the right fit. The IBM purchase of Red Hat for $34 billion in 2019 marked a massive shift for IBM’s strategy to the cloud and stirred uncertainty about IBM’s plans for Power-based platforms and its role in the OpenPOWER Foundation. The integration of the E1080 server line into IBM’s hybrid-cloud strategy now seems to remove the ambiguity surrounding IBM’s plans for the IBM Power product line.

Patrick Moorhead, founder and president of Moor Insights & Strategy, noted, “IBM has changed its focus on Power over the past few years. Power10 is focused on enterprise big data and AI inference workloads delivered in a secure, hybrid cloud model. It looks to really scream on SAP, Oracle, and OpenShift environments when compared to Cascade Lake. The performance numbers IBM touted make sense given the chip’s architecture.”

“On-chip ML inference makes lots of sense when latency is of the utmost importance and being off-chip versus going through PCIe delivers just that in an open (ONNX supported) way. Some enterprises will even train models on these systems if they’re underutilized,” added Moorhead, who said he thought IBM could gain traction, “if it aggressively markets and sells these systems against x86 systems. … I’d say the past few generations were marketed and sold to current clients as replacements for older IBM systems versus ‘going after’ Intel.”

Analyst Peter Rutten of IDC also thinks IBM’s E1080 is a good move. “Keep in mind that this is the 8- or 16-socket enterprise class system that runs AIX first and foremost, as well as OS i and Linux. This is IBM’s transactional/analytics processing system that offers 99.999% availability, high security, and a lot of performance for such traditional workloads as database. The new chip offers several benefits for this system – higher performance (versus Power9), less energy, more bandwidth, lower latency, greater security with baked-in encryption, and the MMA for AI inferencing on the chip, which is something enterprises increasingly want to be able to do on their traditional workloads. The way I see it, IBM hit sort of a sweet spot with this system.”
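For a sense of scale, the 99.999% availability figure Rutten mentions can be converted into permitted downtime. The sketch below is a generic calculation, not an IBM formula; the function name and the use of an average 365.25-day year are our own assumptions.

```python
# Convert an availability percentage into the downtime it permits per year.
# Assumption: an average year of 365.25 days (accounts for leap years).
MINUTES_PER_YEAR = 365.25 * 24 * 60


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the maximum yearly downtime, in minutes, for a given availability."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


five_nines = allowed_downtime_minutes(99.999)
print(f"{five_nines:.2f} minutes per year")  # prints "5.26 minutes per year"
```

In other words, "five nines" leaves room for only a little more than five minutes of unplanned downtime per year.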

Rutten also doesn’t think IBM is easing out of HPC and AI. “I don’t see this as IBM meandering, but as parallel tracks. There is the scale-out portfolio that is all Linux and that’s focused on AI training, HPC, big data analytics. These are the one- and two-socket systems that include the AC922 which was used for Summit. IBM didn’t win the latest supercomputer RFPs but they revealed some very interesting features with Power10 for those workloads. The E1080 is based on a single chip module. But forthcoming is a Dual-Chip Module (DCM), which takes two Power10 chips and puts them (1200 mm² combined) into the same form factor where there used to be just one Power9 processor. This DCM is targeting compute-dense, energy-dense, volumetric space-dense cloud-type configurations with systems ranging from 1 to 4 sockets. I think we’re going to see some screaming performance from these systems when they arrive.”

Not a lot was said about the specific Power10 chip inside the E1080 at IBM’s pre-launch briefing.

Responding to a question about the physical components of the system and new chip during Q&A at the briefing, Boday said, “The E1080 will scale to 240 cores in the entire system itself. The Power10 processor will have 15 cores, the prior generation (Power9-E980) was maxed out at 12. That allows us to scale up to the 240 cores (16 Power10s). We’re also improving the overall number of DIMM slots to where we can actually do 256 DDR4 DIMMs in the system. The overall memory bandwidth [is increased] to over 400 gigs per second, per socket. We’ve introduced Gen5 PCIe slots and we’re allowing you to connect all of this together, [the] individual drawers and nodes of the system through a faster fabric that we call our SMP fabric.”
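The scaling figures Boday cites can be checked with simple arithmetic. The sketch below is illustrative only: the names are ours, the inputs are the numbers quoted at the briefing, and the aggregate bandwidth line assumes per-socket bandwidth simply adds across sockets, which IBM did not state. Because Boday said "over 400 gigs per second," that figure is treated as a lower bound.

```python
# Back-of-the-envelope check of the E1080 figures quoted at the briefing.
# All names are illustrative; inputs come from Boday's remarks.
SOCKETS = 16                  # "16 Power10s" in a fully configured system
CORES_PER_POWER10 = 15        # cores per Power10 processor in the E1080
CORES_PER_POWER9_E980 = 12    # prior-generation maximum per processor
MAX_DIMMS = 256               # DDR4 DIMMs in the system
MIN_BW_PER_SOCKET_GBS = 400   # "over 400 gigs per second, per socket"


def system_totals(sockets: int = SOCKETS) -> dict:
    """Derive system-level totals from the per-socket figures."""
    return {
        "cores": sockets * CORES_PER_POWER10,
        "dimms_per_socket": MAX_DIMMS // sockets,
        # Assumption: bandwidth scales linearly with socket count.
        "min_aggregate_bw_gbs": sockets * MIN_BW_PER_SOCKET_GBS,
        "core_ratio_vs_power9": CORES_PER_POWER10 / CORES_PER_POWER9_E980,
    }


totals = system_totals()
for name, value in totals.items():
    print(f"{name}: {value}")
```

Under these assumptions the numbers are consistent: 16 sockets at 15 cores each give the quoted 240 cores, each socket serves 16 DIMMs, and the per-chip core count is 1.25 times that of the Power9 part in the E980.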

Ken King, general manager of IBM Power, said, “You will see more announcements coming later this year for more of our Power10 family coming to the market, and we’ll be rolling additional ones into early 2022 as well.” IBM disclosed last year that Power10 would be its first 7nm process part and was being fabbed by Samsung.

A fuller picture of the Power10 chip lineup and associated systems will emerge over the next few months. One interesting point is the inclusion of on-chip inference capability. At the briefing, Satya Sharma, IBM fellow and CTO of IBM Power, emphasized that “not requiring exotic accelerators” is a growing trend in the market. Indeed, IBM showcased such capabilities in its new Z series chip (Telum) at the recent Hot Chips conference. Intel has also announced plans to incorporate similar capabilities in its Sapphire Rapids CPU (Intel’s next-generation “Intel 7” processor).

Given IBM’s newer focus on adding inferencing capabilities to Power10, it would be interesting to see how the E1080 fares in the MLPerf inferencing competition. Boday was non-committal and said, “We’re excited about the number of MM (matrix multiply) engines per core that Power10 delivers and how those are going to be very advantageous. As we continue to build out those benchmarks, such as MLPerf, those are things that will be on the radar for us to deliver.” (See HPCwire article on IBM Power10 presentation last year at Hot Chips 2020.)

Mostly IBM stuck tightly to a script touting the new system’s functionality and favorable benchmarks rather than physical specs. IBM is strongly promoting the E1080’s security features and tools. The entire memory is encrypted, with no performance penalty or management set-up, said Sharma.

As an example, he said, “We are providing 4x crypto engines in every core. As a result, customers can get 2.5x more crypto performance. [Using this engine], you can do either end-to-end decryption or encryption. Or you can do [this for] file systems or databases or applications. You can go from server all the way to network to the storage. With this crypto engine capability, you can implement full stack and end to end encryption.”

There is also a centralized dashboard for managing security on the E1080. “Customers can implement a number of different compliance automation tools [including] PCI, HIPAA-readiness, GDPR, and we would ensure that all of the servers in the server farm all comply with these security compliance profiles. At the same time, we are monitoring the entire server farm, if any of these servers go out of compliance,” added Sharma. He ticked through several security elements such as libraries of algorithms for so-called “post-quantum security” as well as isolation measures taken at the CPU and system level.

Certainly, SAP is a big factor in the enterprise market, and IBM’s reported results against its own prior-generation Power9 as well as x86 rivals will draw attention. It will be interesting to keep watching the Power10 family’s development and how many Power10 SKUs IBM ends up offering.

Analyst Shahin Khan of OrionX noted, “AI inference will be the tail that is wagging the Deep Learning dog. It is about infusing apps with AI models and feeding new data back to AI learning. So AI inference is a very large market attracting many new chip and system players. While increased focus on AI is to be expected, IBM’s innovations with memory really also stand out: Open Memory Interface, shared memory, large address space, memory bandwidth, memory clustering, and memory encryption are all very cool and very useful. In an interesting twist, Arm’s success helps expand the market for Power10 since developers who have already re-targeted their app once will find it a lot easier to do so a second or third time.”

Addison Snell, CEO of Intersect360 Research, thought IBM’s latest system and chip fit well into IBM’s expanding enterprise AI focus. “The Power E1080 is an interesting step in IBM’s continued focus on enterprise services and hybrid cloud. Power10 has features that would be useful in HPC, such as its Matrix Math Accelerator (MMA) engines, but IBM is focusing these exclusively on AI inference now—a whiplash-inducing abandonment of HPC since the installations of Summit and Sierra, which are still among the most powerful supercomputers in the world. For enterprise AI, it makes sense to move inferencing capabilities onto the CPU, and this will be part of a general trend among CPU providers,” said Snell.

Stay tuned.

Link to IBM announcement: https://www.hpcwire.com/off-the-wire/ibm-unveils-new-generation-of-ibm-power-servers-for-frictionless-scalable-hybrid-cloud/