Abstract

When studying the dynamics of trait distribution of populations in a heterogeneous environment, classical models from quantitative genetics choose to look at its system of moments, specifically the first two ones. Additionally, in order to close the resulting system of equations, they often assume the local trait distributions are Gaussian [see for instance Ronce and Kirkpatrick (Evolution 55(8):1520–1531, 2001. https://doi.org/10.1111/j.0014-3820.2001.tb00672.x.37)]. The aim of this paper is to introduce a mathematical framework that follows the whole trait distribution (without prior assumption) to study evolutionary dynamics of sexually reproducing populations. Specifically, it focuses on complex traits, whose inheritance can be encoded by the infinitesimal model of segregation (Fisher in Trans R Soc Edinb 52(2):399–433, 1919. https://doi.org/10.1017/S0080456800012163). We show that it allows us to derive a regime in which our model gives the same dynamics as when assuming Gaussian local trait distributions. To support that, we compare the stationary problems of the system of moments derived from our model with the one given in Ronce and Kirkpatrick (Evolution 55(8):1520–1531, 2001. https://doi.org/10.1111/j.0014-3820.2001.tb00672.x.37) and show that they are equivalent under this regime and do not need to be otherwise. Moreover, under this regime of equivalence, we show that a separation bewteen ecological and evolutionary time scales arises. A fast relaxation toward monomorphism allows us to reduce the complexity of the system of moments, using a slow-fast analysis. This reduction leads us to complete, still in this regime, the analytical description of the bistable asymmetrical equilibria numerically found in Ronce and Kirkpatrick (Evolution 55(8):1520–1531, 2001. https://doi.org/10.1111/j.0014-3820.2001.tb00672.x.37). More globally, we provide explicit modelling hypotheses that allow for such local adaptation patterns to occur.

Similar content being viewed by others

References

Akerman A, Bürger R (2014) The consequences of gene flow for local adaptation and differentiation: a two-locus two-deme model. J Math Biol 68(5):1135–1198. https://doi.org/10.1007/s00285-013-0660-z

Barton NH, Etheridge AM, Véber A (2017) The infinitesimal model: definition, derivation, and implications. Theor Popul Biol 118:50–73. https://doi.org/10.1016/j.tpb.2017.06.001

Bouin E et al (2018) Equilibria of quantitative genetics models beyond the Gaussian approximation I: maladaptation to a changing environment. (In preparation)

Bourgeron T et al (2017) Existence of recombination–selection equilibria for sexual populations. arXiv:1703.09078 [math, q-bio]

Bulmer MG (1971) The effect of selection on genetic variability. Am Nat 105(943):201–211

Bulmer MG (1980) The mathematical theory of quantitative genetics. Oxford University Press

Bürger R, Akerman A (2011) The effects of linkage and gene flow on local adaptation: a two-locus continent-island model. Theor Popul Biol 80(4):272–288. https://doi.org/10.1016/j.tpb.2011.07.002

Calvez V, Garnier J, Patout F (2019) Asymptotic analysis of a quantitative genetics model with nonlinear integral operator. J École Polytech 6:537–579. https://doi.org/10.5802/jep.100

Chicone C (1999) Ordinary differential equations with applications. Springer, Berlin

Day T (2000) Competition and the effect of spatial resource heterogeneity on evolutionary diversification. Am Nat 155(6):790–803

Débarre F, Ronce O, Gandon S (2013) Quantifying the effects of migration and mutation on adaptation and demography in spatially heterogeneous environments. J Evol Biol 26(6):1185–1202. https://doi.org/10.1111/jeb.12132

Débarre F, Yeaman S, Guillaume F (2015) Evolution of quantitative traits under a migration–selection balance: when does skew matter? Am Nat 186(S1):S37–S47. https://doi.org/10.1086/681717

Desvillettes L et al (2008) On selection dynamics for continuous structured populations. Commun Math Sci 6(3):729–747. https://doi.org/10.4310/CMS.2008.v6.n3.a10

Diekmann O et al (2005) The dynamics of adaptation: an illuminating example and a Hamilton–Jacobi approach. Theor Popul Biol 67(4):257–271. https://doi.org/10.1016/j.tpb.2004.12.003

Fisher RA (1919) The correlation between relatives on the supposition of mendelian inheritance. Trans R Soc Edinb 52(2):399–433. https://doi.org/10.1017/S0080456800012163

Galton F (1877) Typical laws of heredity 1. Nature 15:492–495

Hendry AP, Day T, Taylor EB (2001) Population mixing and the adaptive divergence of quantitative traits in discrete populations: a theoretical framework for empirical tests. Evolution 55(3):459–466. https://doi.org/10.1111/j.0014-3820.2001.tb00780.x

Lange K (1978) Central limit theorems of pedigrees. J Math Biol 6(1):59–66. https://doi.org/10.1007/BF02478517

Lavigne F et al (2019) When sinks become sources: adaptive colonization in asexuals. bioRxiv https://doi.org/10.1101/433235. https://www.biorxiv.org/content/early/2019/05/03/433235

Levin JJ, Levinson N (1954) Singular perturbations of non-linear systems of differential equations and an associated boundary layer equation. J Ration Mech Anal 3:247–270

Lythgoe KA (1997) Consequences of gene flow in spatially structured populations. Genet Res 69(1):49–60. https://doi.org/10.1017/S0016672397002644

Magal P, Webb GF (2000) Mutation, selection, and recombination in a model of phenotype evolution. Discrete Contin Dyn Syst A 6(1):221–236. https://doi.org/10.3934/dcds.2000.6.221

Meszéna G, Czibula I, Geritz S (1997) Adaptive dynamics in a 2-patch environment: a toy model for allopatric and parapatric speciation. J Biol Syst 05(02):265–284. https://doi.org/10.1142/S0218339097000175

Mirrahimi S (2017) A Hamilton–Jacobi approach to characterize the evolutionary equilibria in heterogeneous environments. Math Models Methods Appl Sci 27(13):2425–2460. https://doi.org/10.1142/s0218202517500488

Mirrahimi S, Gandon S (2020) Evolution of specialization in heterogeneous environments: equilibrium between selection, mutation and migration. Genetics 214(2):479–491. https://doi.org/10.1534/genetics.119.302868

Mirrahimi S, Raoul G (2013) Dynamics of sexual populations structured by a space variable and a phenotypical trait. Theor Popul Biol 84:87–103. https://doi.org/10.1016/j.tpb.2012.12.003

Nagylaki T, Lou Y (2001) Patterns of multiallelic polymorphism maintained by migration and selection. Theor Popul Biol 59(4):297–313. https://doi.org/10.1006/tpbi.2001.1526

Perthame B, Barles G (2008) Dirac concentrations in Lotka–Volterra parabolic PDEs. Indiana Univ Math J 57(7):3275–3302. https://doi.org/10.1512/iumj.2008.57.3398

Raoul G (2017) Macroscopic limit from a structured population model to the Kirkpatrick–Barton model. arXiv:1706.04094 [math]

Ronce O, Kirkpatrick M (2001) When sources become sinks: migrational meltdown in heterogeneous habitats. Evolution 55(8):1520–1531. https://doi.org/10.1111/j.0014-3820.2001.tb00672.x.37

Tufto J (2000) Quantitative genetic models for the balance between migration and stabilizing selection. Genet Res 76(3):285–293. https://doi.org/10.1017/S0016672300004742

Turelli M (2017) Commentary: Fisher’s infinitesimal model: a story for the ages. Theor Popul Biol 118:46–49. https://doi.org/10.1016/j.tpb.2017.09.003

Turelli M, Barton NH (1994) Genetic and statistical analyses of strong selection on PolygenicTraits: what, me normal? Genetics 138:913–941. https://doi.org/10.1093/genetics/138.3.913

Yeaman S, Guillaume F (2009) Predicting adaptation under migration load: the role of genetic skew. Evolution 63(11):2926–2938. https://doi.org/10.1111/j.1558-5646.2009.00773.x

Acknowledgements

The author thanks Vincent Calvez and Sepideh Mirrahimi for supervising this project and Sarah P. Otto for precise and helpful comments. The author also thanks Ophélie Ronce, Florence Débarre, Amandine Véber, Alison Etheridge and Florian Patout for insightful conversations. The author thanks Gael Raoul and two anonymous reviewers for valuable comments that improved the manuscript. The author has received partial funding from the ANR project DEEV ANR-20-CE40-0011-01 and from a Mitacs Globalink Research Award. This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programm (Grant Agreement No. 639638).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The codes to reproduce the figures of this article are available at https://github.com/ldekens/Evolutionary-dynamics-of-complex-traits-in-sexual-populationsin-a-heterogeneous-environment

Appendices

Appendices

A System of moments derived from our model

Here, we derive the system of moments (2) from (1). In the preliminary computations, we will omit the time and deme dependency for the sake of clarity. We will then denote \(\varvec{n}\) the trait distribution density, \(\varvec{N}\) the size of the population, \(\varvec{\overline{z}}\) the mean trait, \(\varvec{\sigma }^2\) the mean variance, \(\varvec{\psi }^3\) the third central moment and \(\varvec{\theta }\) the optimal phenotype.

Preliminary integration of the selection term We have:

and:

Size of the subpopulations Recalling that \(\varvec{N_i}(\varvec{t}) = \displaystyle \int _\mathbb {R}\varvec{n_i}(\varvec{t},\varvec{z})\,d\varvec{z}\), we get from the preliminary computations by integrating (1):

Local mean trait Recalling that \(\varvec{\overline{z}_{i}}(\varvec{t}) =\frac{1}{\varvec{N_{i}}(\varvec{t})}\displaystyle \int _\mathbb {R}\varvec{z}\,\varvec{n_i}(\varvec{t},\varvec{z})\,d\varvec{z}\), we have, thanks to the preliminary computations:

B Equilibria of a dynamical system under the infinitesimal model of reproduction with random mating only

In this subsection, we show that (7) admits any Gaussian of variance \(\varepsilon ^2\) as equilibrium. That is equivalent to state that:

Proposition B.1

For \(\mu \in \mathbb {R}\), the Gaussian distribution \(G_{\mu ,\varepsilon ^2}\) of mean \(\mu \) and variance \(\varepsilon ^2\) is a fixed point of the operator \(\mathcal {B}_\varepsilon \), namely:

Proof

We can first notice that \({\mathcal {B}_\varepsilon }\) can be written using a double convolution product: \(\square \)

Lemma 10

For \(f \in \mathcal {L}_1(\mathbb {R}), \displaystyle \int _\mathbb {R}{f} \ne 0\), we have:

where \(F: z \mapsto f(2z)\).

Proof

(Proof of Lemma 10) For \(f \in \mathcal {L}_1(\mathbb {R}), \displaystyle \int _\mathbb {R}f \ne 0\), a straight-forward computation yields:

\(\square \)

If \(f = G_{\mu ,\varepsilon ^2}\), then we find \(F = \frac{1}{2}\times G_{\frac{\mu }{2},\frac{\varepsilon ^2}{4}}\). Besides, as the convolution product of two Gaussian kernels \(G_{\mu _1,\sigma ^2_1}\) and \(G_{\mu _2,\sigma ^2_2}\) is the Gaussian kernel \(G_{\mu _1+\mu _2,\sigma _1 ^2+\sigma _2 ^2}\), Proposition B.1 is a corollary of the previous lemma.

C Formal expansion within the exponential formalism for \(n_{\varepsilon }\)

In this subsection, we will remove the deme dependency for the sake of clarity. To formally derive (9), let us consider the following formal expansion of \(U_\varepsilon \) with regard to successive orders of \(\varepsilon ^2\):

The aim is to characterize \(u_0\) thanks to the behaviour of the reproduction term when \(\varepsilon \ll 1\), which we expect neither to diverge nor to vanish:

Then, we have several considerations to make. First, if we assume that \(u_{0}\) reaches its minimum at a non degenerate point \(z^*\), then the following modified expression of the denominator:

will have its integrand concentrate around the minimum of \(u_{0}\) and will converge as \(\varepsilon \ll 1\). Therefore it is relevant to introduce this minimum both at the numerator and the denominator.

Then, since we expect the numerator not to diverge nor to vanish uniformly as \(\varepsilon \ll 1\), we need that:

As shown in Bouin et al. (2018), thanks to some convexity arguments, this leads necessarily to choose \(u_{0}\) as a quadratic function in z, hence its decomposition:

where \(z^*\) is realizing the minimum of \(u_{0}\). Note that \(u(z^*) = 0\), due to the Laplace method of integration, since:

So either \(u(z^*)=0\), either there is extinction or explosion of the population size. That yields (9).

Convexity arguments from Bouin et al. (2018). Let us recall the arguments of convexity involved in Bouin et al. (2018) to show that functional constraint (27) leads in our case to \(u_0\) being quadratic:

-

1.

First, they show that \(u_0\) has some regularities (continuous and has left and right derivative everywhere), for (27) implies that \(z\mapsto u_0(z) -z^2\) is concave as minimum of affine functions:

$$\begin{aligned} \forall z\in \mathbb {R},\quad u_0(z) -z^2 = \underset{(z_1,z_2)}{\min }\left[ -z(z_1+z_2) + \frac{\left( z_1+z_2\right) ^2}{4} + u_{0}(z_1) + u_{0}(z_2) \right] . \end{aligned}$$ -

2.

Next, they introduce the Legendre convex conjugate

$$\begin{aligned}\hat{u_0} : p \mapsto \underset{z\in \mathbb {R}}{\sup }\left[ (z-z^*)p -u(z)\right] ,\end{aligned}$$and show that it satisfies the following functional equality, by commuting the different \(\sup \) operators while computing \(\hat{u}_0(p)\) using (27):

$$\begin{aligned} \forall p \in \mathbb {R},\quad \hat{u_0}(p) = \frac{p^2}{4}+ 2\,\hat{u_0}\left( \frac{p}{2}\right) . \end{aligned}$$(29) -

3.

As \(\hat{u_0}\) is convex by definition, it is continuous and admits left and right derivative everywhere. Moreover, \(\hat{u_0}\) has a minimum in 0 and \(\hat{u_0}(0) = -u(z^*) = 0\). Therefore (29) implies by recursion:

$$\begin{aligned} \forall p >0\quad (\text {resp.} <0), \quad \hat{u}_0(p) = \frac{p^2}{2}+ \hat{u_0}'(0^+)\,p \quad (\text {resp.} \;\hat{u_0}'(0^-)\,p). \end{aligned}$$(30)Note that 0 being a minimum of \(\hat{u}_0\) implies that: \(\hat{u_0}'(0^-)\le 0\le \hat{u_0}'(0^+)\).

-

4.

The next step aims at showing that \(u_0\) is equal to its convex bi-conjugate

$$\begin{aligned} \hat{\hat{u_0}} : z \mapsto \underset{p\in \mathbb {R}}{\sup }\left[ p\,(z-z^*) -\hat{u_0}(p)\right] , \end{aligned}$$which is computable from (30):

$$\begin{aligned} \hat{\hat{u_0}} : z\mapsto \begin{aligned} {\left\{ \begin{array}{ll} \frac{(z-z^*-\hat{u}_0(0^-))^2}{2}\text { if }z<z^*+\hat{u}_0(0^-)\\ 0\qquad \quad \text { if }z^*+\hat{u}_0(0^-)\le z \le z^*+\hat{u}_0(0^+)\\ \frac{(z-z^*-\hat{u}_0(0^+))^2}{2}\text { if }z>z^*+\hat{u}_0(0^+). \end{array}\right. } \end{aligned} \end{aligned}$$(31)Standard convexity analysis shows also that \(\hat{\hat{u_0}}\) is the lower convex envelope of \(u_0\). The first implication is that \(u_0\) and \(\hat{\hat{u_0}}\) coincide on \(\mathbb {R}\backslash [z^*+\hat{u}_0(0^-),z^*+\hat{u}_0(0^+)]\), because \(\hat{\hat{u_0}}\) is strictly convex there. The second implication is that \(u_0\left( z^*+\hat{u}_0(0^+)\right) = \hat{\hat{u}}_0\left( z^*+\hat{u}_0(0^+)\right) =0\) (resp. \(z^*+\hat{u}_0(0^-)\)), since \(z^*+\hat{u}_0(0^+)\) (resp. \(z^*+\hat{u}_0(0^-)\)) is an extremal point of the graph of \(\hat{\hat{u}}_0\). One can show using (27) that the midpoint between any zeros of \(u_0\) is still a zero of \(u_0\) (recall that \(u_0\ge 0\)). Hence, by density and continuity of \(u_0\), \(u_0\) vanishes on \([z^*+\hat{u}_0(0^-),z^*+\hat{u}_0(0^+)]\).

-

5.

Finally, since \(u_0\) satisfies (31) and we need \(N_\varepsilon \) not to explode when \(\varepsilon \) vanishes, we necessarily obtain that \(\hat{u}_0(0^-) = \hat{u}_0(0^+)\). Hence \(u_0\) quadratic.

D Formal approximations of the trait distributions moments in the regime of small variance \(\varepsilon ^2 \ll 1\)

This appendix is dedicated to formally explain (12). We remove the time and the deme dependency for the sake of clarity. We denote \(n_\varepsilon \) the trait distribution density, \(N_\varepsilon \) the size of the population, \(\overline{z}_\varepsilon \) the mean trait, \(\sigma _\varepsilon ^2\) the variance and \(\psi _\varepsilon \) the third central moment. Let us also recall that the computations are performed using the exponential formalism introduced in (10) while considering the following formal expansion of \(u_\varepsilon \) in the regime of small variance:

Size of population We have:

from the computations of the moments of a Gaussian.

Mean trait Similarly as above, we have:

Variance Using the previous formal computations and methodology, we get:

Third central moment We compute, using the same change in variable \(y:= \frac{z-z^*}{\varepsilon }\):

E Fast/slow system: proof of Theorem 3.1

This appendix is dedicated to prove Theorem 3.1.

Let \((z^*_0,\bar{Y}^*_0)\in \mathbb {R}\times \Omega \) (we recall that \(\Omega =(\mathbb {R}_+^*)^2\times \mathbb {R}\)) be on the slow manifold, ie. such that \({G(z^*_0,\bar{Y}^*_0)} = 0\). From Lemma 6 of fast relaxation towards the slow manifold, the jacobian matrix \(J_G(z^*_0,\bar{Y}^*_0)\) is invertible. Consequently, the implicit function theorem gives us U open neighbourhood of \(z^*_0\) in \(\mathbb {R}\), V open neighbourhood of \((z^*_0,\bar{Y}^*_0)\) in \(\mathbb {R}\times \Omega \) and \(\phi \in C^\infty (U,V)\) such that :

Hence, we can define a notation that we shall use henceforth:

If K is a compact subset of U such that \(z^*_0 \in \mathring{K}\), we can define the Cauchy problem \((E_0)\) by the following :

for \(t\le t^*\), that we define as the following:

It is similar to (20) with the initial conditions \((z^*(0),\bar{Y}^*_0) = (z^*_0,\phi (z^*_0))\). A essential part of the proof relies in the fact that we can define the following uniform positive constant, thanks to Lemma 6 of fast relaxation:

As the first step, we state the following lemma whose proof will be provided at the end of this appendix. It defines a uniform control constant \(\gamma >0\):

Lemma 11

There exists \(\gamma >0\) such that:

\(({\left| \left| \left| \cdot \right| \right| \right| }_{\mathcal {M}_3(\mathbb {R})}\) is noted \({\left| \left| \left| \cdot \right| \right| \right| })\).

The next step is to show the convergence of solutions of \((P_\varepsilon )\) (19) towards those of \((P_0)\) (20) on a time interval, yet to be defined, that will be shown to be uniform with regard to \(\varepsilon \) and the initial conditions, provided that they are small enough. For that purpose, it is more convenient to consider the system \((R_\varepsilon )\) verified by the residuals \(r^\varepsilon _z(t) = z_\varepsilon (t)-z^*(t)\) and \(r^\varepsilon _Y(t) = \bar{Y}_\varepsilon (t)-\bar{Y}^*(t)\):

and introduce some further definitions.

Because K is a compact set, there exists \(\delta _K>0\) such that the following set is a compact subset of V:

Let us consider from now \((z^\varepsilon _0,N^\varepsilon _0) \in \bar{K}_{\delta _K}\). Then we define \(\Delta = \min \left( \frac{\lambda _K}{4C\gamma },\delta _K\right) \) and \(T=\min (t^*,\frac{\lambda _K}{4C'\gamma })\), where:

and :

where \(\Pi _\Omega \) is the projection from \(\mathbb {R}\times \Omega \) on \(\Omega \). One can notice from these definitions and from Lemma 11, that \(\gamma , \Delta , T, \lambda _K, C, C'\) do not depend on \(\varepsilon \) and are uniform on \([0,t^*]\). Specifically taking \(\Delta \le \frac{\lambda _K}{4C\gamma }\) and \(T\le \frac{\lambda _K}{4C'\gamma }\) will turn out to be important in the proof.

On the time region [0, T], we will show that we can control explicitly the various perturbed terms that arise. We can now state the following proposition, whose proof constitutes the core of the resolution of the problem:

Proposition E.1

As \(\max (\varepsilon ,|r^\varepsilon _z(0)|,|r^\varepsilon _Y(0)|) \rightarrow 0\), \((\bar{Y}_\varepsilon ,z_\varepsilon )\) converges toward \((\bar{Y}^*,z^*)\) uniformly on [0, T].

For the final step, we will show that we can reiterate the process on each interval of time \([jT,\min \{(j+1)T,t^*\}]\) with \(\forall j \le \lfloor \frac{t^*}{T}\rfloor , jT\le t^*_\varepsilon \). Thus, for sufficiently small \(\varepsilon \) and initial conditions, the control remains valid until \(t^*\), hence Theorem 3.1.

For convenience, we will denote by \(f*g\,(t)\) the convolution product of f and g at time \(t>0\) :

Proof

(Proof of Proposition E.1)

Let \(\varepsilon \in ]0,1]\). Let us define an auxiliary time \(t^*_\varepsilon \):

It ensures that the perturbed trajectory stays inside of \(\bar{K}_{\delta _K}\) when \(t\le t^*_\varepsilon \).

Let us highlight the main steps of the proof:

-

1.

preliminary controls on \(r^\varepsilon _Y\) by \(|r^\varepsilon _Y(0)|\) and \(\frac{1}{\varepsilon ^2}|r^\varepsilon _z|*e^{-\frac{\lambda _K}{2\varepsilon ^2}\cdot }\) thanks to the regularity of G, the fast relaxation properties (Lemma 6 and Lemma 11) and Gronwall’s lemma.

-

2.

control \(|r^\varepsilon _z|\) by \(|r^\varepsilon _z(0)|\) and \(|r^\varepsilon _Y|\).

-

3.

finish the control on \(r^\varepsilon _Y\) by using the latter and Gronwall’s lemma.

-

1.

For \(t\le \min (T,t^*_\varepsilon )\), we can introduce new terms in the equation from (33) on \(r^\varepsilon _Y\) :

$$\begin{aligned} \frac{dr^\varepsilon _Y}{dt}&=\frac{J_{z^*(0)}}{\varepsilon ^2}r^\varepsilon _Y+\frac{1}{\varepsilon ^2}\left[ {G(z^*(t),\bar{Y}^*(t)+r^\varepsilon _Y(t))-G(z^*(t),\bar{Y}^*(t))}-J_{z^*(0)}r^\varepsilon _Y\right] \\&\quad + \frac{1}{\varepsilon ^2}\left[ {G(z^*(t)+r^\varepsilon _z(t),\bar{Y}^*(t)+r^\varepsilon _Y(t))-G(z^*(t),\bar{Y}^*(t)+r^\varepsilon _Y(t))}\right] \\ {}&\quad -\phi '(z^*(t))(-2gz^*(t)+F(\phi (z^*(t))))+\nu _{N,\varepsilon }(t)\\ \\&= \frac{J_{z^*(0)}}{\varepsilon ^2}r^\varepsilon _Y +A_1(t)+A_2(t)+A_3(t). \end{aligned}$$Since \(t\le \min (T,t^*_\varepsilon )\) and G is \(C^\infty \) on \(\bar{K}_{\delta _K}\times [0,1]\), we can control \(A_1\):

$$\begin{aligned} |A_1(t)|\le & {} \frac{1}{\varepsilon ^2}\left[ |{G(z^*(t),\bar{Y}^*(t)+r^\varepsilon _Y(t))-G(z^*(t),\bar{Y}^*(t)}-J_{z^*(t)}r^\varepsilon _Y|\right] \\&+\frac{1}{\varepsilon ^2}\left[ {\left| \left| \left| J_{z^*(t)}-J_{z^*(0)} \right| \right| \right| }\,|r^\varepsilon _Y(t)|\right] \\\le & {} \frac{1}{\varepsilon ^2}\left[ \Vert \partial ^2_{\bar{Y}}G\Vert _ {\infty ,{\bar{K}_{\delta _K}}}\,|r^\varepsilon _Y(t)|^2+T\,\underset{t\le t^*}{\max }{\left| \left| \left| \partial _t J_{z^*(t)} \right| \right| \right| }\,|r^\varepsilon _Y(t)|\right] \\\le & {} \frac{1}{\varepsilon ^2} (C\Delta +C'T) |r^\varepsilon _Y(t)|, \end{aligned}$$and \(A_2\):

$$\begin{aligned} |A_2(t)|= & {} \frac{1}{\varepsilon ^2}|{G(z^*(t)+r^\varepsilon _z(t),\bar{Y}^*(t)+r^\varepsilon _Y(t))}-{G(z^*(t),\bar{Y}^*(t)+r^\varepsilon _Y(t))}|\\ {}\le & {} \frac{1}{\varepsilon ^2}{\Vert \partial _z G\Vert _ {\infty ,{\bar{K}_{\delta _K}}}\,|r^\varepsilon _z(t)|\le \frac{C}{\varepsilon ^2}|r^\varepsilon _z(t)|}, \end{aligned}$$and \(A_3\):

$$\begin{aligned} |A_3(t)| = |-\phi '(z^*(t))(-2gz^*(t)+F(\phi (z^*(t))))+\nu _{N,\varepsilon }(t)|\le C'', \end{aligned}$$for some constant \(C''\) independent of \(\varepsilon \) and \(z^*(0)\in K\). Using Duhamel formulas, we get, for \(t\le \min (T,t^*_\varepsilon )\):

$$\begin{aligned} r^\varepsilon _Y(t) = e^{\frac{J_{z^*(0)}t}{\varepsilon ^2}}r^\varepsilon _Y(0) + \left[ e^{\frac{J_{z^*(0)}\cdot }{\varepsilon ^2}} *(A_1+A_2+A_3) \right] \,(t). \end{aligned}$$(34)Hence, applying Lemma 11 yields:

$$\begin{aligned} |r^\varepsilon _Y(t)|&\le \gamma |r^\varepsilon _Y(0)|e^{-\frac{\lambda _Kt}{\varepsilon ^2}}+\frac{\gamma }{\varepsilon ^2}\left[ \left( C|r^\varepsilon _z|+(C\Delta +C'T)|r^\varepsilon _Y|\right) *e^{-\frac{\lambda _K}{\varepsilon ^2}\cdot }\right] (t) \\&\quad + \varepsilon ^2\gamma \frac{{C''}}{\lambda _K}\\&\le A^{r^\varepsilon _z}(t) + \frac{\gamma (C\Delta +C'T)}{\varepsilon ^2}\int _0^t|r^\varepsilon _Y(\tau )|\,e^{\frac{\lambda _K}{\varepsilon ^2}(\tau -t)}d\tau , \end{aligned}$$where \(A^{r^\varepsilon _z} (t) := \gamma |r^\varepsilon _Y(0)|e^{-\frac{\lambda _Kt}{\varepsilon ^2}}+\frac{\gamma C}{\varepsilon ^2}\left( |r^\varepsilon _z|*e^{-\frac{\lambda _K}{\varepsilon ^2}\cdot }\right) (t) + \varepsilon ^2\gamma \frac{{C''}}{\lambda _K}\). Applying Gronwall inequality to \(r^\varepsilon _Y(t)e^{\frac{\lambda _Kt}{\varepsilon ^2}}\) yields:

$$\begin{aligned} |r^\varepsilon _Y(t)|\le A^{r^\varepsilon _z}(t) + \frac{\gamma (C\Delta +C'T)}{\varepsilon ^2}\left[ A^{r^\varepsilon _z}*e^{\left( \frac{-\lambda _K}{\varepsilon ^2}+\frac{\gamma (C\Delta +C'T)}{\varepsilon ^2}\right) \cdot }\right] (t). \end{aligned}$$(35)Having fixed \(\Delta \le \frac{\lambda _K}{4C\gamma }\) and \(T\le \frac{\lambda _K}{4C'\gamma }\) in the preliminaries ensures that \(e^{\left( \frac{-\lambda _K}{\varepsilon ^2}+\frac{\gamma (C\Delta +C'T)}{\varepsilon ^2}\right) \cdot }\) defines a negative exponential term, that we can dominate by \(e^{-\frac{\lambda _K}{2\varepsilon ^2} \cdot }\). Hence:

$$\begin{aligned} |r^\varepsilon _Y(t)|\le A^{r^\varepsilon _z}(t) + \left[ A^{r^\varepsilon _z}*\frac{\lambda _K}{2\varepsilon ^2}e^{-\frac{\lambda _K}{2\varepsilon ^2} \cdot }\right] (t). \end{aligned}$$(36)Making \(A^{r^\varepsilon _z}\) explicit gives:

$$\begin{aligned}&|r^\varepsilon _Y(t)|\le \gamma |r^\varepsilon _Y(0)|e^{-\frac{\lambda _Kt}{\varepsilon ^2}}+\frac{\gamma C}{\varepsilon ^2}|r^\varepsilon _z|*e^{-\frac{\lambda _K}{\varepsilon ^2}\cdot }(t) + \varepsilon ^2\gamma \frac{{C''}}{\lambda _K}\nonumber \\&\qquad + \left[ \left( \gamma |r^\varepsilon _Y(0)|e^{-\frac{\lambda _K}{\varepsilon ^2}\cdot }+\frac{\gamma C}{\varepsilon ^2}\left[ |r^\varepsilon _z|*e^{-\frac{\lambda _K}{\varepsilon ^2}\cdot }\right] + \varepsilon ^2\gamma \frac{{C''}}{\lambda _K} \right) *\left( \frac{\lambda _K}{2\varepsilon ^2}e^{-\frac{\lambda _K}{2\varepsilon ^2} \cdot }\right) \right] (t)\nonumber \\&\quad \le \gamma |r^\varepsilon _Y(0)|\left[ e^{-\frac{\lambda _Kt}{\varepsilon ^2}}+ e^{-\frac{\lambda _K}{\varepsilon ^2}\cdot }*\left( \frac{\lambda _K}{2\varepsilon ^2}e^{-\frac{\lambda _K}{2\varepsilon ^2} \cdot }\right) (t)\right] +\varepsilon ^2\gamma \frac{{C''}}{\lambda _K}(\left( 1+\int _0^t\frac{\lambda _K}{2\varepsilon ^2}e^{-\frac{\lambda _K}{2\varepsilon ^2}(\tau -t)}dt\right) \nonumber \\&\qquad +\frac{\gamma C}{\varepsilon ^2}|r^\varepsilon _z|*\left( e^{-\frac{\lambda _K}{\varepsilon ^2}\cdot }+ e^{-\frac{\lambda _K}{\varepsilon ^2\cdot }}*\frac{\lambda _K}{2\varepsilon ^2}e^{-\frac{\lambda _K}{2\varepsilon ^2} \cdot }\right) (t), \end{aligned}$$(37)thanks to the associativity of the convolution product. One can compute that, for \(t\ge 0\):

$$\begin{aligned} e^{-\frac{\lambda _Kt}{\varepsilon ^2}}+e^{-\frac{\lambda _K}{\varepsilon ^2}\cdot }*\left( \frac{\lambda _K}{2\varepsilon ^2}e^{-\frac{\lambda _K}{2\varepsilon ^2} \cdot }\right) (t)&= e^{-\frac{\lambda _Kt}{\varepsilon ^2}}+\frac{\lambda _K}{2\varepsilon ^2}\int _0^te^{-\frac{\lambda _K}{\varepsilon ^2}\tau }e^{-\frac{\lambda _K}{2\varepsilon ^2}(t-\tau )}d\tau \\&= e^{-\frac{\lambda _Kt}{\varepsilon ^2}}+\frac{\lambda _K}{2\varepsilon ^2}\int _0^te^{-\frac{\lambda _K}{2\varepsilon ^2}(t+\tau )}d\tau = e^{-\frac{\lambda _K}{2\varepsilon ^2}t}. \end{aligned}$$Hence, replacing those terms in (37) yields:

$$\begin{aligned} |r^\varepsilon _Y(t)|\le \gamma |r^\varepsilon _Y(0)|e^{-\frac{\lambda _K}{2\varepsilon ^2}t}+2\varepsilon ^2\gamma \frac{{C''}}{\lambda _K}+\frac{C\gamma }{\varepsilon ^2}|r^\varepsilon _z|*e^{-\frac{\lambda _K}{2\varepsilon ^2}\cdot }(t). \end{aligned}$$(38) -

2.

The next step is to gain similarly some control on \(|r^\varepsilon _z|\). Using Duhamel formula on the equation from (33) on \(r^\varepsilon _z\) gives, for \(t\le \min (T,t^*_\varepsilon )\):

$$\begin{aligned} r^\varepsilon _z(t) = r^\varepsilon _z(0)e^{-2gt}+ \left( \left[ F(N^*+r^\varepsilon _Y)-F(N^*)+\varepsilon ^2\nu _{z,\varepsilon }\right] *e^{-2g\cdot }\right) (t), \end{aligned}$$which yields:

$$\begin{aligned} |r^\varepsilon _z(t)| \le |r^\varepsilon _z(0)|e^{-2gt}+\varepsilon ^2\frac{\Vert \nu _{z,\varepsilon }\Vert _\infty }{2g}+\Vert \partial _{\bar{Y}} F\Vert _ {\infty ,\Pi _\Omega (\bar{K}_{\delta _K})}\left( |r^\varepsilon _Y|*e^{-2g\cdot }\right) (t). \end{aligned}$$Hence:

$$\begin{aligned} |r^\varepsilon _z(t)| \le |r^\varepsilon _z(0)|e^{-2gt}+\varepsilon ^2\frac{\Vert \nu _{z,\varepsilon }\Vert _\infty }{2g}+C\left( |r^\varepsilon _Y|*e^{-2g\cdot }\right) (t). \end{aligned}$$(39)At that point, it is clear that it is sufficient to control \(|r^\varepsilon _Y|\) and \(|r^\varepsilon _z(0)|\) in order to control \(|r^\varepsilon _z(t)|\) for sufficiently small \(\varepsilon \).

-

3.

Plugging the latter in (38) gives:

$$\begin{aligned}&|r^\varepsilon _Y(t)|\le \gamma |r^\varepsilon _Y(0)|e^{-\frac{\lambda _K}{2\varepsilon ^2}t}+\frac{C\gamma }{\varepsilon ^2}|r^\varepsilon _z(0)|\left( e^{-2g\cdot } *e^{-\frac{\lambda _K}{2\varepsilon ^2}\cdot }\right) (t)+\varepsilon ^2\frac{C\gamma \Vert \nu _{z,\varepsilon }\Vert _\infty }{\lambda _K g}\nonumber \\&\quad +2\varepsilon ^2\gamma \frac{{C''}}{\lambda _K}+\frac{\gamma C^2}{\varepsilon ^2}\left[ |r^\varepsilon _Y|*\left( e^{-2g\cdot }*e^{-\frac{\lambda _K}{2\varepsilon ^2}\cdot } \right) \right] (t). \end{aligned}$$(40)Similarly as the computation above, we have, for \(\varepsilon ^2<\min (\frac{\lambda _K}{8g},1)\) and \(t\ge 0\):

$$\begin{aligned} e^{-2g\cdot }*e^{-\frac{\lambda _K}{2\varepsilon ^2}\cdot }(t) = \frac{1}{\frac{\lambda _K}{2\varepsilon ^2}-2g}\left( e^{-2gt}-e^{-\frac{\lambda _K}{2\varepsilon ^2}t}\right) \le \frac{4\varepsilon ^2}{\lambda _K}e^{-2gt}. \end{aligned}$$Hence, for \(\varepsilon ^2<\min (\frac{\lambda _K}{8g},1)\), we get from (40):

$$\begin{aligned} |r^\varepsilon _Y(t)|&\le \gamma |r^\varepsilon _Y(0)|e^{-\frac{\lambda _K}{2\varepsilon ^2}t}+\frac{2\gamma C}{\lambda _K}|r^\varepsilon _z(0)|e^{-2gt}+\varepsilon ^2\frac{C\gamma \Vert \nu _{z,\varepsilon }\Vert _\infty }{\lambda _K g}+2\varepsilon ^2\gamma \frac{{C''}}{\lambda _K}\\ {}&\quad +\frac{2\gamma C^2}{\lambda _K}\left( |r^\varepsilon _Y|*e^{-2g\cdot } \right) (t)\\&\le C^\varepsilon _0(t) +\frac{2\gamma C^2}{\lambda _K}\left( |r^\varepsilon _Y|*e^{-2g\cdot }\right) (t), \end{aligned}$$where we define: \(C^\varepsilon _0(t):=\gamma |r^\varepsilon _Y(0)|e^{-\frac{\lambda _K}{2\varepsilon ^2}t}+\frac{2\gamma C}{\lambda _K}|r^\varepsilon _z(0)|e^{-2gt}+\varepsilon ^2\frac{C\gamma \Vert \nu _{z,\varepsilon }\Vert _\infty }{\lambda _K g}+2\varepsilon ^2\gamma \frac{{C''}}{\lambda _K}\). Using once again Gronwall inequality on \(|r^\varepsilon _Y|e^{2g\cdot }\) yields:

$$\begin{aligned} |r^\varepsilon _Y(t)|\le C^\varepsilon _0(t) +\frac{2\gamma C^2}{\lambda _K}\left( C^\varepsilon _0*e^{\left( -2g+\frac{2\gamma C^2}{\lambda _K}\right) \cdot }\right) (t). \end{aligned}$$(41)Recalling that:

$$\begin{aligned} C^\varepsilon _0(t) = \gamma |r^\varepsilon _Y(0)|e^{-\frac{\lambda _K}{2\varepsilon ^2}t}+\frac{2\gamma C}{\lambda _K}|r^\varepsilon _z(0)|e^{-2gt}+\varepsilon ^2\frac{C\gamma \Vert \nu _{z,\varepsilon }\Vert _\infty }{\lambda _K g}+2\varepsilon ^2\gamma \frac{{C''}}{\lambda _K}, \end{aligned}$$we get that, thanks to (41) and (39), for a given \(0<\delta <\Delta \), there exists \(\eta _\delta >0\) depending only on \(\delta ,g,m,K,t^*,F,G,\Vert \nu _{z,\varepsilon }\Vert _\infty \) such that :

$$\begin{aligned} \forall (\varepsilon ,|r^\varepsilon _Y(0)|,|r^\varepsilon _z(0)|) \in [0,\eta _\delta ]^3, \underset{t\le \min (T,t^*_\varepsilon )}{\max }|r^\varepsilon _Y(t)|+|r^\varepsilon _z(t)|\le \delta . \end{aligned}$$Recalling that \(t^*_\varepsilon = \min \left( t^*,\inf \{t>0, |r^\varepsilon _z|+|r^\varepsilon _Y|> \Delta \}\right) \), we get that \(T\le t^*_\varepsilon \), for \(\delta <\Delta \) and \((\varepsilon ,|r^\varepsilon _Y(0)|,|r^\varepsilon _z(0)|) \in [0,\eta _\delta ]^3\). Consequently, the convergence is uniform on [0, T]. \(\square \)

Proof

(Proof of Theorem 3.1) One can notice that the control obtained in the proof of Proposition 1 can be applied on any time interval \([a,a+T]\) with \(a\in [0,t^*-T]\), provided that \((\varepsilon ,|r^\varepsilon _Y(a)|,|r^\varepsilon _z(a)|)\) are small enough. Therefore, we can reiterate the control a finite number of times on the intervals \([jT,\min \{(j+1)T,t^*\}]\) with \(\forall j \le \lfloor \frac{t^*}{T}\rfloor \). Hence, the uniform convergence on \([0,t^*]\). \(\square \)

Proof

(Proof of Lemma 11) Recall that for all \( z \in K\), \(J_z\) has real negative eigenvalues, uniformly bounded over K by \(-2\lambda _K<-\lambda _K\). Let us define, for \(z\in K\):

For all \(z\in \mathbb {K}\), \(f_{\lambda _K,z}\) is continuous. Moreover, Theorem 2.34 of Chicone (1999) ensures that \(f_{l,z}\) is bounded for all \(l<2\lambda _K\).

We can thus define :

Let us show that \(\Gamma _{\lambda _K}\) is a continuous function. Let \(z_0\in K\) and \(\varepsilon >0\).

One can first notice that, for \(s\ge 0\):

Thus, \(f_{\lambda _K,z}\) vanishes when s goes to infinity. As a consequence, there exists \(s_0 \ge 0\) such that:

Furthermore, for \(l\in ]\lambda _K,2\lambda _K[\), we have:

We can therefore choose \(l\in ]\lambda _K,2\lambda _K[\) such that \(\Gamma _{\lambda _K}(z_0) \le \Gamma _{l}(z_0) \le \Gamma _{\lambda _K}(z_0)+\varepsilon \).

As \(z\mapsto J_z\) is a continuous function, there exists \(\delta >0\) that ensures that for if \(z\in K\) and \(|z-z_0|\le \delta \), then:

Let us consider such a z.

As \(e^{J_z s}\) is solution of the ODE : \(y'=J_{z_0}y+(J_{z}-J_{z_0})y\), we obtain, for \(s\ge 0\):

Hence :

From applying Gronwall’s inequality to \(t\mapsto {\left| \left| \left| e^{J_z s} \right| \right| \right| }e^{ls}\), it comes that, for \(s\ge 0\):

Hence:

Moreover, recall that \(t_0\) was defined so that :

Then, by continuity of \(z\mapsto e^{J_z s_0}\), there exists \(\delta '>0\) that ensures that for \(|z-z_0|\le \delta '\), we have:

Hence:

In conclusion, if \(|z-z_0| \le \min (\delta ,\delta ')\), then \(|\Gamma _{\lambda _K}(z)-\Gamma _{\lambda _K}(z_0)| \le \varepsilon \). Hence \(\Gamma _{\lambda _K}\) is continuous over K. Furthermore, as K is a compact set, \(\Gamma _{\lambda _K}\) is bounded, by \(\gamma \). \(\square \)

F Proof of Proposition 3.1

This appendix is dedicated to the proof of Proposition 3.1.

Proof

Let \((g,m,z^*)\in \mathbb {R}^*_+\times \mathbb {R}^*_+\times \mathbb {R}_+\) be such that \(P_{z^*}\) has a single positive root. From Lemma 1, this root defines a fast equilibrium if it is greater than \(f_1(z^*)\). From Lemma 2, that is the case if and only if \(f_1(z^*)\) is negative or \(P_{z^*}(f_1(z^*))\) is negative.

First, regarding the sign of \(f_1(z^*)\), we have:

which requires that \(m<1\). If \(m<1\) then:

which requires that \(m+g< 1\). Hence:

Next, regarding the sign of \(P_{z^*}(f_1(z^*))\), we compute:

Let us define:

\(z_1,z_2\) its two roots and \(\Delta = \frac{4}{g^2}\left[ m^2-4g\,(m-1)\right] \) its discriminant. From the computation above,

We have:

and:

Consequently:

- \(\diamond \):

-

if \(g\ge 1\), then \(2m+g-1>0\) and then \(z_1z_2\ge 0\). If, additionally, \(m<2g\left( 1-\sqrt{1-\frac{1}{g}}\right) \), then \(m< 2 \le g+1\) (\(g\mapsto 2g-2\sqrt{g^2-g}\) is decreasing on \([1,+\infty [\)). Therefore, we get: \(z_1+z_2> 0\) and thus, \(z_2>0\) and \(z_1\ge 0\). At last, if \(m>2g\left( 1+\sqrt{1-\frac{1}{g}}\right) \), then \(m>2\,g\ge g+1\), which implies \(z_1+z_2<0\) and thus \(z_1< 0, z_2\le 0\).

- \(\diamond \):

-

if \(g< 1\), then \(z_1+z_2\ge 0\) if and only if \(m\le g+1\) and \(z_1z_2\ge 0\) if and only if \(m\le \frac{1-g}{2}\) (which is lower than \(g+1\)).

Hence the result. \(\square \)

G Proof of Lemma 9

This section is dedicated to proving Lemma 9, which concludes the proof of Proposition 4.2.

Proof

(Proof of Lemma 9)

Let \((m,g)\in {\mathbb {R}_+^*}^2\) verify (25). Then, from the first part of the proof of Proposition 4.2, there exists a unique \(\rho ^*>0\) that is solution of the equation in (23). Let us define \(N_1^*\) and \(N_2^*\) such as in (26). Then we have: \(0<\rho ^* = \frac{N_2^*}{N_1^*}\). Thus:

Borrowing once again the notations: \(a = \frac{4g}{m}\), \(b=\frac{1}{m}\) and \(y^*=\rho ^*+\frac{1}{\rho ^*}\) (unique root of S larger than 2), (26) leads to:

As \(S({y^*}) = 0\), we get:

This is always true whenever \(m \le \frac{1}{2}\). Otherwise, let us suppose henceforth that \(2m>1\). The condition above is equivalent to:

Let us show that: \(c+\sqrt{c^2+2c}\ge 2\). It is sufficient to show that: \(c\ge \frac{2}{3}\), which is equivalent to having: \(3g+1\ge 2m\). In this proof, we are considering \((m,g) \in {\mathbb {R}_+^*}^2\) such that \(1+2m<5g\) and \(4g\,(m-1)<m^2\). Let us show that such pairs verify \(3g+1\ge 2m\):

- \(\diamond \):

-

if \(g\le 1\), then \(m < \frac{5g-1}{2} \le \frac{3g+1}{2}\).

- \(\diamond \):

-

if \(g\ge 1\), then \(m<2g-2\sqrt{g^2-g}\) which is a decreasing function on \([1,+\infty [\), which takes the value 2 when \(g=1\). Hence it is always dominated by \(g\mapsto \frac{3g+1}{2}\) on this interval.

Hence \(c+\sqrt{c^2+2c}\ge \frac{2}{3}+\sqrt{\frac{4}{9}+\frac{4}{3}}=2\). Therefore, as \(y^*\) is the only root of S greater than 2, we get the following equivalence:

The rest of the proof is dedicated to examine the conditions on (m, g) under which:

Let us set \(Q:=\sqrt{c^2+2c} = \sqrt{4g\,\frac{g+2m-1}{(2m-1)^2}}\). Tedious computations done with the help of Mathematica show that: \(S(c) = Q^2\left[ \frac{g(4-6m)+(2m-1)^2}{{m}\,(2m-1)}\right] \), and we next compute:

Hence:

Let us study different cases corresponding to different ranges of value of \(m>\frac{1}{2}\).

If \(\underline{m=1}\), then the last line is equivalent to :

which is true for all \(g>0\).

If \(\underline{\frac{1}{2}<m<1}\), then:

and:

Hence, for all g such that \(1+2m<5g\) and \(m^2>4g\,(m-1)\):

If \(\underline{m>1}\), then:

Hence, if: \(4g\,(m-1)+2 m^2-5 m+2<0\), then, for all g such that \(1+2m<5g\) and \(m^2>4g\,(m-1)\):

Otherwise, if \(4g\,(m-1)+2 m^2-5 m+2\ge 0\), then:

Let us note \(x:=\frac{2m-1}{g}\). Then, the latter is equivalent to:

\(\square \)

H Details of the numerical analysis carried out in Sect. 2

Domains We consider a bounded trait domain \([-\varvec{z_{\max }},\varvec{z_{\max }}]\), discretised in a mesh \(\left( \varvec{z}_k\right) _{0\le k < K}\) (K odd) with regard to the step length \(\delta z>0\), and a time domain \([0,\varvec{T_{\max }}]\), discretised in a mesh \(\left( \varvec{t}^l\right) _{0\le l< L}\) with regard to the step length \(\delta t>0\). In the the simulations involved in Fig. 2, we use the following values for the parameters:

Scheme For \(i\in \{1,2\}, 0\le l <L\), we approximate the trait distributions \(\varvec{n_i}(\varvec{t}^l,\cdot )\) by \(\left( \varvec{n}_{i,k}^l\right) _{0\le k<K}\) with the following semi-implicit scheme:

where \(\varvec{N}_i^{l} = \sum _{k=0}^{K-2} \varvec{n}_{i,k}^l\,\delta z\) and \(\varvec{B}_{i,k}^l\) is a discretisation of the reproduction operator \(\varvec{\mathcal {B}_\sigma }(\varvec{n_i}(t^l,z_k)\). In the next paragraph, we detail how we compute \(\left( \varvec{B}_{i,k}^l\right) _{0\le k<K}\).

We approximate the system of moments of Ronce and Kirkpatrick (2001) following a similar semi-implicit scheme.

Discretization of the reproduction operator The discretization of the reproduction operator is in accordance with the double convolution form shown in Lemma 10, as it increases greatly the computational speed in comparison to a double loop. However, the half-arguments involved in Lemma 10 calls for a special attention to the meshes involved.

Let us define two auxiliary trait meshes

-

1.

\(\left( \varvec{\tilde{z}}_{k'}\right) _{0\le k' < 2K-1}\) on \([-\varvec{z_{\max }},\varvec{z_{\max }}]\), with step length \(\frac{\delta z}{2}\),

-

2.

\(\left( \varvec{\hat{z}}_{k''}\right) _{0\le k'' < 4K-3}\) on \([-2\varvec{z_{\max }},2\varvec{z_{\max }}]\), with step length \(\frac{\delta z}{2}\).

We define the vector \(\left( G_{k'}\right) _{0\le k' < 2K-1}\) discretising the Gaussian kernel involved in our reproduction operator on the trait grid \(\left( \varvec{\tilde{z}}_{k'}\right) _{0\le k' < 2K-1}\):

We next define the vector \(\left( \hat{B}_{i,k''}^l\right) _{0\le k''<4K-3}\) resulting from the following double discrete convolution (denoted \(*\)):

We use a convolution algorithm with default settings: the size of the output is the sum of entry vector sizes minus one, and out of bounds index entries are extrapolated as 0. A straight-forward computation shows that \(\left( \hat{B}_{i,k''}^l\right) _{0\le k''<4K-3}\) is the approximation of the reproduction operator on the mesh \(\left( \varvec{\hat{z}}_{k''}\right) _{0\le k'' < 4K-3}\):

Thus, we interpolate \(\left( \hat{B}^l_{i,k''}\right) _{0\le k''<4K-3}\) at the entries corresponding to \(\left( \varvec{z}_k\right) _{0\le k < K}\) to obtain \(\left( \varvec{B}_{i,k}^l\right) _{0\le k<K}\).

I Numerical outcomes details: Figs. 6 and 7

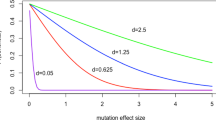

Numerical setting The lower panel of Fig. 6 has been produced by running 3600 simulations, one for each couple of migration rate \(m \in [0.01,\,3]\) and intensity of selection \(g\in [0.01,\,3]\), for \(t\le T_{max} \in \left[ \frac{300}{\varepsilon ^2},\frac{600}{\varepsilon ^2}\right] \), with a criteria to cut the simulation short at a time greater than \(\frac{300}{\varepsilon ^2}\) if the difference between two consecutive steps is small enough. The value of the other parameters are the same for each simulation: \(r = 1, \theta = 1, \kappa = 1, \varepsilon = 0.05\), as well as the initial state:

The initial state is taken as monomorphic, as the aim of this figure is to be compared to the theoretical outcomes that are predicted within the scope of the slow-fast analysis as stated in Theorem 3.1 (so when the initial state is close enough from the slow manifold).

Scoring Each simulation final state \((n_1^f,n_2^f)\) is attributed a score between 0 and 1 according to the following scheme:

-

1.

If \(\max \left( N_1^f,N_2^f\right) <0.01\), then the score is 0 (for extinction) and is corresponding to the deep purple color. Else, the score is a positive number (lower than 1) according to what follows.

-

2.

If the variance in trait of the metapopulation is greater than \(2\,\varepsilon ^2\), the score is 1 (corresponding to the color yellow). This would be the case if the final state is dimorphic, but more generally, this is to highlight the simulations whose final state does not fall in the small segregational variance regime analysis prediction (which in particular predicts that the distribution of trait in the metapopulation is monomorphic (see Sect. 3), with a variance of order \(\varepsilon ^2\) (see (12)).

-

3.

If both conditions above are not met, then the score S is given according to the following formula:

$$\begin{aligned} S = \frac{5}{6} - \frac{1}{3}\frac{\left| N_2^f-N_1^f\right| }{N_1^f+N_2^f}. \end{aligned}$$This formula discriminates between symmetrical equilibria (which are characterized by equal population sizes, see Proposition 4.1), which typically have a score of \(\frac{5}{6}\) (corresponding to the color light green), and asymmetrical equilibria, which have a discrepancy in local population sizes and therefore have a typically much lower score (in the blue tones).

Adjustments for Fig. 7 The methodology is the same for the lower panel of Fig. 6 and both panels of Fig. 7, at the exception of the initial state, set as:

and of the time step for the lower panel of Fig. 7, which is refined to keep up with the smaller value of \(\varepsilon ^2\).

Rights and permissions

About this article

Cite this article

Dekens, L. Evolutionary dynamics of complex traits in sexual populations in a heterogeneous environment: how normal?. J. Math. Biol. 84, 15 (2022). https://doi.org/10.1007/s00285-021-01712-0

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00285-021-01712-0

Keywords

- Quantitative genetic model

- Heterogeneous environment

- Asymptotic analysis

- Infinitesimal model

- Sexual reproduction